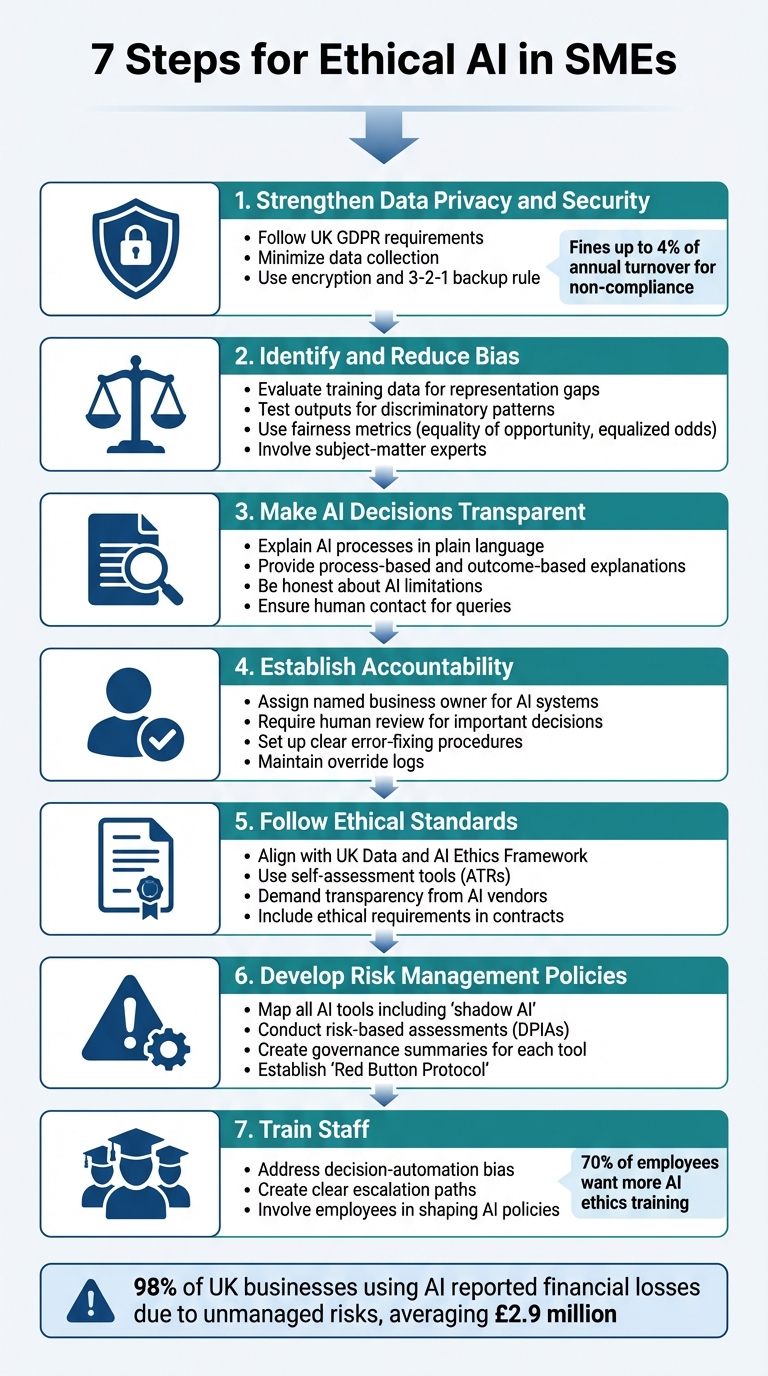

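By 2026, using AI responsibly is no longer optional for small and medium-sized enterprises (SMEs) in the UK. Ethical AI practices help businesses avoid fines, protect customer trust, and maintain compliance with data protection laws. This guide breaks down seven practical steps to integrate ethical AI into your business operations: protecting data privacy, reducing bias, making AI decisions transparent, establishing accountability, following recognised ethical standards, putting governance and risk policies in place, and building an ethical workplace culture.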

These steps not only help SMEs stay compliant but also build trust with customers and partners. Ethical AI is about balancing innovation with responsibility, ensuring your business can thrive in a rapidly evolving landscape.

7 Steps for Implementing Ethical AI in Small and Medium Enterprises

Protecting customer data is the cornerstone of ethical AI. When handling personal information - like phone numbers or employee records - for training AI systems, you bear a legal responsibility to safeguard it. Under UK GDPR, personal data must be "processed lawfully, fairly and in a transparent manner". The stakes are high: the most serious breaches can attract fines of up to £17.5 million or 4% of your annual worldwide turnover, whichever is higher.

Before collecting any data, establish a lawful basis for doing so. The ICO offers a Lawful Basis Checker to help determine whether you need explicit consent, rely on contractual obligations, or justify processing under legitimate interests. Keep in mind that AI development often requires separate lawful bases for the training and deployment phases. If you're dealing with sensitive data - like medical records or information about children - you'll likely need to complete a Data Protection Impact Assessment (DPIA) first.

Stick to data minimisation principles by collecting only what’s absolutely necessary for your AI's intended purpose. Delete any temporary files, such as compressed transfer files, as soon as they’re no longer needed. Fortunately, compliance doesn’t have to break the bank: most small businesses in the UK only need to pay an annual ICO registration fee of £52 or £78.
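As a minimal sketch of data minimisation in practice, the snippet below keeps only an allow-list of fields before a record goes anywhere near a training set. The field names and the `minimise` helper are hypothetical and chosen purely for illustration; your own allow-list should follow from the purpose you documented.

```python
# Hypothetical allow-list: the only fields this imagined model needs.
ALLOWED_FIELDS = frozenset({"order_total", "product_category", "order_month"})


def minimise(record: dict, allowed=ALLOWED_FIELDS) -> dict:
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "name": "A. Customer",          # not needed for the model, so dropped
    "phone": "07700 900123",        # not needed for the model, so dropped
    "order_total": 42.50,
    "product_category": "garden",
    "order_month": "2025-03",
}
training_row = minimise(raw)
print(training_row)
```

An allow-list (rather than a block-list) means any new field added upstream is excluded by default until someone decides it is genuinely necessary.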

Once you've ensured compliance with data protection laws, turn your attention to strengthening data security measures.

The UK GDPR mandates that you implement "appropriate technical or organisational measures" to protect personal data. For small and medium enterprises (SMEs), this means encrypting data, restricting access, and following the 3-2-1 backup rule: maintaining three copies of your data, stored on two different devices, with one copy kept off-site.
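The 3-2-1 rule is simple enough to check automatically. The sketch below assumes you keep a small inventory of backup copies; the `device` and `offsite` field names and the `meets_3_2_1` helper are illustrative, not part of any standard tool.

```python
def meets_3_2_1(copies: list) -> bool:
    """Check backup copies against the 3-2-1 rule.

    Each copy is a dict with a 'device' label and an 'offsite' flag
    (both field names are assumptions made for this sketch).
    """
    distinct_devices = {item["device"] for item in copies}
    has_offsite = any(item["offsite"] for item in copies)
    return len(copies) >= 3 and len(distinct_devices) >= 2 and has_offsite


backups = [
    {"device": "office-server", "offsite": False},
    {"device": "external-drive", "offsite": False},
    {"device": "cloud-storage", "offsite": True},
]
print(meets_3_2_1(backups))      # True
print(meets_3_2_1(backups[:2]))  # False: only two copies, none off-site
```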

AI systems, however, come with their own set of risks that traditional security approaches might overlook. For example, a 2019 vulnerability in NumPy allowed attackers to execute remote code using disguised training data. To mitigate such risks, consider isolating your machine learning environment using virtual machines or containers. Additionally, apply API rate-limiting to prevent privacy attacks. Research has shown that attackers can reconstruct facial images from training datasets and match them to individuals with 95% accuracy, making these precautions crucial.
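To illustrate the rate-limiting idea, here is a rough sliding-window limiter that caps how many queries each client can send to a model endpoint in a given period. The class name, limits, and client identifiers are all assumptions for the example; in production you would more likely rely on the rate-limiting features of your API gateway or web framework.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` per client within any `window_seconds` span."""

    def __init__(self, max_calls: int, window_seconds: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without waiting
        self._calls = defaultdict(deque)  # client id -> timestamps of recent calls

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        recent = self._calls[client_id]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_calls:
            return False  # over the limit: reject (or queue) this query
        recent.append(now)
        return True


limiter = SlidingWindowLimiter(max_calls=100, window_seconds=60)
if limiter.allow("client-123"):
    pass  # forward the query to the model here
```

Capping the query rate does not make a model private by itself, but it raises the cost of attacks that depend on sending very large numbers of probing requests.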

AI systems rely on data to learn, but if that data carries historical inequalities or fails to represent certain groups, the AI will mirror and perpetuate those unfair patterns. The Information Commissioner's Office (ICO) highlights this issue:

Any processing of personal data using AI that leads to unjust discrimination between people, will violate the fairness principle.

For small and medium-sized enterprises (SMEs), bias isn't just an ethical issue - it’s a legal one. Failing to address it could mean breaching UK GDPR and the Equality Act 2010. By tackling bias, you not only comply with legal standards but also promote fairness and build trust.

Bias often creeps in early, during problem formulation, when measurable proxies are defined. Choosing the wrong proxy can lead to skewed results. For example, a pneumonia risk model underestimated the risk to asthma patients: because those patients had historically received more intensive treatment, their recorded outcomes were better, and the model learned to treat asthma as a sign of lower risk. To counter such issues, scrutinise your training data and regularly test your AI's outputs to detect and correct bias.

Start by assessing whether your training data fairly represents the groups your AI will impact. Imbalanced datasets - where certain demographics are underrepresented - cause the model to overlook patterns relevant to those groups. Simply removing sensitive data like race or gender won’t eliminate bias, as other variables, such as postcode or occupation, often act as "proxies" for these attributes, allowing discrimination to persist.

The ICO cautions:

You should not assume that collecting more data is an effective substitute for collecting better data.

Instead, bring in subject-matter experts and involve affected communities to identify gaps your team might overlook. If you’re using pre-built models or third-party datasets, demand detailed documentation on demographic coverage and fairness testing.
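A quick first check on representation is to compare each group's share of the training data with its share of the population the AI will affect. The sketch below is one way to do that; the age bands, reference shares, and 5-point tolerance are made-up values for illustration, and `representation_gaps` is a hypothetical helper.

```python
from collections import Counter


def representation_gaps(groups, reference_shares, tolerance=0.05):
    """Return groups whose share of the data falls more than `tolerance`
    below their reference share, with the size of the shortfall."""
    counts = Counter(groups)
    total = len(groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = round(expected - observed, 3)
    return gaps


# Illustrative data: 100 training records labelled by age band.
sample = ["18-34"] * 70 + ["35-54"] * 25 + ["55+"] * 5
# Illustrative reference shares for the customer base (an assumption).
reference = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}
print(representation_gaps(sample, reference))
```

A check like this only flags headline imbalances. It says nothing about proxies such as postcode or occupation, which is why expert and community review remains necessary.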

Even after addressing data imbalances, bias can emerge in the system's outputs. Monitoring should be an ongoing process, not a one-off task. Run your AI alongside traditional decision-making methods to compare outcomes and identify disparities before fully deploying it. Use fairness metrics like "equality of opportunity" (people who merit a positive outcome have the same chance of receiving one whichever group they belong to, meaning equal true positive rates) or "equalised odds" (both true positive and false positive rates are balanced across groups).
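The two metrics named above can be computed from a small table of past decisions. The following is a plain-Python sketch, assuming binary labels (1 = positive outcome) and one group label per record; the function names are hypothetical, and reporting the larger of the two rate gaps for equalised odds is one common convention rather than the only one.

```python
def rates_by_group(y_true, y_pred, groups):
    """Return {group: (true_positive_rate, false_positive_rate)}."""
    tallies = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        t = tallies.setdefault(group, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if truth == 1:
            t["tp" if pred == 1 else "fn"] += 1
        else:
            t["fp" if pred == 1 else "tn"] += 1
    rates = {}
    for group, t in tallies.items():
        positives = t["tp"] + t["fn"]
        negatives = t["fp"] + t["tn"]
        tpr = t["tp"] / positives if positives else float("nan")
        fpr = t["fp"] / negatives if negatives else float("nan")
        rates[group] = (tpr, fpr)
    return rates


def fairness_gaps(rates):
    """Largest between-group gap in TPR (equal opportunity) and the larger
    of the TPR and FPR gaps (equalised odds)."""
    tprs = [tpr for tpr, _ in rates.values()]
    fprs = [fpr for _, fpr in rates.values()]
    tpr_gap = max(tprs) - min(tprs)
    fpr_gap = max(fprs) - min(fprs)
    return {"equal_opportunity_gap": tpr_gap,
            "equalised_odds_gap": max(tpr_gap, fpr_gap)}


# Toy data: four decisions for group A, four for group B.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = rates_by_group(y_true, y_pred, groups)
print(rates)
print(fairness_gaps(rates))
```

In this toy data, group A has a true positive rate of 1.0 and a false positive rate of 0.5, while group B has 0.5 and 0.0, so both gaps come out at 0.5. A gap near zero is the goal; what counts as acceptable is a judgement for your business to document.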

A layered approach works best. Start by pre-processing data to improve representation, enforce fairness during model training, and adjust outputs where necessary. Incorporate human reviewers to check AI outputs for equity, but ensure they’re trained to avoid "automation bias" - the tendency to over-rely on AI decisions. Keep detailed records of instances where human decisions override AI outputs, including the rationale, to refine the model over time.

Being transparent with AI isn't just about admitting its use; it's about making its decisions easy to understand. If stakeholders can't grasp how AI reaches conclusions, trust begins to crumble, and accountability becomes a challenge. Transparency requires explaining the reasoning behind AI decisions clearly.

Under UK GDPR (Articles 13–15), organisations must provide "meaningful information about the logic involved" in automated decisions that significantly impact individuals. This builds on the earlier emphasis on data integrity by ensuring AI reasoning is both accessible and verifiable. Beyond meeting legal obligations, clear explanations help people spot mistakes, challenge unfair results, and adjust their actions for better outcomes. As the Information Commissioner's Office (ICO) points out:

Explainability can help to build trust by making it easier to understand decision-making processes, and easier to identify risks, inaccuracies and harms.

Technical jargon can alienate non-experts, so translate complex metrics into everyday language. It's important to distinguish between two types of explanations: process-based (how the system was created and operates) and outcome-based (why a specific decision was made). The latter is particularly crucial for building trust, because it tells a person why the system reached the decision that affects them.

A layered approach works best: start with the essential details in plain terms, then offer deeper technical documentation for those who want to dive in further. Visual aids like diagrams, summary tables, or simple graphs can also help illustrate how various factors influenced the AI's output.

Explanation should feel like a dialogue, not just a one-sided information dump. The ICO highlights:

Individuals should not only have easy access to additional explanatory information... but they should also be able to discuss the AI-assisted decision with a human being.

Ensure there's a designated human contact available to clarify queries and review exceptions.

It's equally important to acknowledge what AI can't do. Machine learning results are based on statistical generalisations, not certainties. These systems identify patterns and correlations but don't prove causation. When using complex "black box" models like neural networks, it's crucial to admit that explanations of these models only approximate their logic.

Be upfront about the probabilistic nature of AI outputs, using clear indicators to communicate uncertainty. Train your staff to explain these limitations and to recognise cognitive biases, such as "decision-automation bias" (over-relying on AI results) and "automation-distrust bias" (rejecting accurate AI insights).
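One simple "clear indicator" is to turn a model's probability score into a plain-language phrase. The sketch below assumes the score is reasonably well calibrated (a score of 0.9 is right about nine times in ten); the band boundaries and the `describe_confidence` name are illustrative choices, not recommended values.

```python
def describe_confidence(probability: float) -> str:
    """Translate a model probability into a plain-language statement."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= 0.9:       # illustrative threshold
        band = "high"
    elif probability >= 0.7:     # illustrative threshold
        band = "moderate"
    else:
        band = "low"
    chances = round(probability * 100)
    return (f"{band} confidence (about {chances} in 100); "
            "this is an estimate, not a certainty")


print(describe_confidence(0.93))
print(describe_confidence(0.55))
```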

The ICO advises:

You should only use 'black box' models if you have thoroughly considered their potential impacts and risks in advance.

If such systems are necessary, document the reasoning behind their use, the trade-offs between performance and interpretability, and the methods for monitoring errors. Include procedures for human oversight, where reviewers verify the AI's findings and step in when necessary. Keep records of instances where human judgement overrides AI decisions, so the system can improve over time. By breaking down AI decision-making, SMEs can better uphold ethical practices and reduce the risks tied to opaque AI outputs.

After ensuring transparency in decision-making, the next step is to establish clear accountability. Transparency on its own isn’t enough - someone must take responsibility when AI systems make mistakes, which they inevitably will. This step shifts the focus from explaining how AI works to identifying who is responsible for its outcomes. According to the ICO, senior management must ultimately answer for AI governance. They cannot simply pass this responsibility to technical teams. The guidance further states that a designated senior role must have the authority to authorise or override AI decisions, prioritising accountability over technical expertise.

Every AI system should have a named business owner - often a Managing Director or an AI accountability officer - tasked with overseeing deployments, reviewing incidents, and maintaining records. This individual must also have the power to suspend operations if errors become frequent or severe. Additionally, organisations risk fines of up to 4% of their annual turnover for breaches of UK GDPR related to AI. By assigning clear ownership, businesses can ensure robust human oversight and a structured approach to handling errors.

Human oversight plays a vital role in maintaining ethical standards, especially for automated decisions that significantly impact individuals. Examples include credit approvals, hiring processes, and customer service outcomes. These decisions must undergo active human review. This means reviewers should critically assess AI outputs instead of blindly accepting them, which helps avoid automation bias. The ICO highlights:

Human intervention should involve a review of the decision, which must be carried out by someone with the appropriate authority and capability to change that decision.

To ensure effective oversight, assign knowledgeable and independent reviewers with manageable workloads. Keep an override log to document every human intervention and the reasoning behind it. Periodically conduct mystery shopping exercises to check whether reviewers are genuinely scrutinising AI decisions.
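An override log does not need special software. As a rough sketch, the structure below records each human intervention with its rationale and refuses entries that leave the rationale blank; the field names and helper functions are assumptions for illustration, and a shared spreadsheet with the same columns would serve the same purpose.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class OverrideEntry:
    decision_id: str
    ai_outcome: str
    human_outcome: str
    reviewer: str
    rationale: str
    recorded_at: str  # ISO 8601 timestamp in UTC


def record_override(log, decision_id, ai_outcome, human_outcome, reviewer, rationale):
    """Append an override to the log, insisting on a written rationale."""
    if not rationale.strip():
        raise ValueError("An override must include the reviewer's rationale.")
    entry = OverrideEntry(decision_id, ai_outcome, human_outcome, reviewer,
                          rationale, datetime.now(timezone.utc).isoformat())
    log.append(entry)
    return entry


def override_rate(log, total_decisions):
    """Share of AI decisions that a human changed."""
    return len(log) / total_decisions if total_decisions else 0.0


log = []
record_override(log, "D-001", "decline", "approve", "j.smith",
                "Income evidence was misread by the system")
print(override_rate(log, total_decisions=4))  # 0.25
```

An override rate close to zero over a long period can itself be a warning sign that reviewers are accepting AI outputs without real scrutiny.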

SMEs need well-defined processes for addressing AI errors quickly and effectively. This includes setting up escalation procedures and fallback systems - manual processes that can take over if an AI system fails or its accuracy declines. Document tolerance thresholds and use automated tools to identify decision-making issues. Additionally, provide clear communication channels for customers or individuals to query or challenge AI-driven decisions.
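Documented tolerance thresholds can be expressed as a small routing rule that decides when the manual fallback takes over. The sketch below is one possible shape; the threshold values, the route names, and the `route_decision` function are all hypothetical.

```python
def route_decision(ai_confidence, recent_error_rate, *,
                   min_confidence=0.8, max_error_rate=0.1):
    """Decide how a case is handled, based on documented tolerance thresholds.

    Both default thresholds are illustrative, not recommendations.
    """
    if recent_error_rate > max_error_rate:
        # The system as a whole is outside tolerance: use the manual process.
        return "manual_fallback"
    if ai_confidence < min_confidence:
        # The system is healthy but unsure about this particular case.
        return "human_review"
    return "ai_recommendation"


print(route_decision(ai_confidence=0.95, recent_error_rate=0.02))
print(route_decision(ai_confidence=0.60, recent_error_rate=0.02))
print(route_decision(ai_confidence=0.95, recent_error_rate=0.25))
```

Even on the "ai_recommendation" route, decisions that significantly affect individuals still need the active human review described earlier; the routing only determines how much extra scrutiny a case gets.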

The ICO advises:

If grave or frequent mistakes are identified, you need to take immediate steps to understand and rectify the underlying issues and, if necessary, suspend the use of the automated system.

Regularly review data from contested decisions to identify problems like concept drift, where AI models lose accuracy as data patterns evolve. Use these insights to retrain models or adjust data collection methods. Draft all policies in plain English to make them easy to understand and encourage compliance. By putting these accountability measures in place, businesses show that ethical AI isn’t just about the technology itself - it’s about taking responsibility for its impact on people and society.
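A basic way to watch for concept drift is to compare the AI's recent accuracy, measured on decisions whose true outcome is now known, against the accuracy recorded at launch. This is a simplified sketch: the 5-point tolerance, the minimum sample size, and the `drift_alert` name are assumptions, and dedicated monitoring tools use more refined statistical tests.

```python
def drift_alert(baseline_accuracy, recent_outcomes, max_drop=0.05, min_samples=30):
    """Flag a review when recent accuracy falls too far below the baseline.

    recent_outcomes: list of booleans, True where the AI decision was
    later confirmed as correct.
    """
    if len(recent_outcomes) < min_samples:
        return {"status": "insufficient_data", "recent_accuracy": None}
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    drop = baseline_accuracy - recent_accuracy
    status = "review" if drop > max_drop else "ok"
    return {"status": status, "recent_accuracy": recent_accuracy}


# Illustrative quarter: 40 of 50 reviewed decisions turned out to be correct.
print(drift_alert(0.90, [True] * 40 + [False] * 10))
```

With a launch accuracy of 0.90 and a recent accuracy of 0.80, the drop exceeds the tolerance and the result is flagged for review, which is the cue to investigate and possibly retrain.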

Once you've nailed down strong data security and transparency measures, the next step is to align your AI practices with recognised ethical standards. In the UK, this means adhering to frameworks like the Data and AI Ethics Framework, which is built around key principles such as Transparency, Accountability, and Fairness. These principles also help ensure compliance with UK GDPR and the Equality Act 2010 obligations.

Transparency, as highlighted by the Government Digital Service, involves openly and clearly communicating how your data and AI processes work. Meanwhile, accountability requires proper governance and oversight to keep everything in check. Tools like the Data and AI Ethics Self-Assessment Tool and the Algorithmic Transparency Recording Standard (ATRS) make it easier to turn these big-picture ideas into actionable steps without drowning in red tape. For a deeper dive, the ICO and Alan Turing Institute's guidance on "Explaining Decisions Made with AI" offers a framework to help you make AI-driven decisions more understandable for the people they affect.

When evaluating AI vendors, these standards are your best friend. Ask pointed questions about their training data, fairness testing processes, and performance metrics to assess compliance. The Government Digital Service emphasises:

Vendors of AI systems must be able to clearly explain the steps they take to build tools, the logic and assumptions built into their tools, and how their tools generate outputs.

Push for thorough documentation that covers everything from training processes to feature selection and algorithmic impact assessments. To keep vendors accountable, include ethical requirements in contracts through accuracy-based KPIs or Service Level Agreements.

Real-world examples show how this works in practice. Pilot projects led by major charities demonstrate that a cautious, well-documented approach can effectively manage the risks associated with AI. Beyond reducing legal and reputational risks - remember, the ICO can fine companies up to 4% of their annual turnover for AI-related GDPR breaches - following these standards can also help you stand out in a crowded market by building trust with your customers.

Once you've aligned with ethical standards, the next step is to put governance structures in place to manage AI risks as your business grows. This isn't about creating unnecessary red tape; it's about setting up clear, documented rules to ensure your AI is managed effectively. A 2026 study found that 98% of UK businesses using AI reported financial losses due to unmanaged risks, averaging £2.9 million. The good news? Most AI failures can be avoided with early risk identification.

Start by mapping all AI tools in use, including any unofficial 'shadow AI', and classify them based on their risk level. Tools with higher stakes, like those used for recruitment or credit decisions, require Data Protection Impact Assessments (DPIAs) under UK GDPR. On the other hand, tools with lower stakes, such as scheduling assistants, can operate with less oversight.
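The mapping exercise can start as a very small inventory. The sketch below classifies tools into three tiers using the examples from the text; the tier names, the rule that unapproved tools are at least medium risk, and every identifier are assumptions for illustration rather than a regulatory scheme.

```python
from dataclasses import dataclass

# Illustrative list of high-stakes uses; adjust to your own business.
HIGH_STAKES_USES = {"recruitment", "credit decisions"}


@dataclass
class AITool:
    name: str
    use: str
    handles_personal_data: bool
    approved: bool  # False marks unofficial 'shadow AI'


def risk_tier(tool: AITool) -> str:
    """Assign a coarse risk tier (the three tiers are an assumption of this sketch)."""
    if tool.use in HIGH_STAKES_USES:
        return "high"
    if tool.handles_personal_data or not tool.approved:
        return "medium"
    return "low"


inventory = [
    AITool("CV screener", "recruitment", True, True),
    AITool("Meeting scheduler", "scheduling", False, True),
    AITool("Personal chatbot account", "drafting emails", False, False),
]
for tool in inventory:
    tier = risk_tier(tool)
    # A 'high' tier is only a prompt to carry out proper DPIA screening.
    print(f"{tool.name}: {tier} risk, flag for DPIA screening: {tier == 'high'}")
```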

Focus your attention on systems that impact critical areas like customer interactions, finances, or employment decisions. These deserve the most scrutiny. Regularly monitor these systems - quarterly 'health checks' are a good practice - to catch issues like concept drift, where AI performance declines as data patterns change. This proactive approach helps you address problems before they spiral out of control.

Once you’ve assessed and prioritised risks, it’s time to formalise ethical guidelines for each tool.

Create a concise governance summary for every AI tool your business uses. This should include the tool's purpose, its data privacy requirements, and a clear assignment of accountability to a senior individual - not just a vague reference to "the team." Establish a 'Red Button Protocol' so employees know exactly who to contact if the AI produces biased, offensive, or incorrect outcomes. The Information Commissioner’s Office highlights the importance of accountability:

Accountability requires a named senior role who can authorise or override AI decisions, stressing decision authority over technical expertise.

Your policy should outline approved tools, prohibited activities (like uploading sensitive client data to public AI platforms), and the process for adopting new systems. For example, in August 2025, the British Heart Foundation piloted a generative AI project with safeguards such as limiting personal data in prompts, requiring human approval for external communications, and reviewing interaction logs monthly. This iterative approach allowed them to refine their rules based on practical experience while managing risks effectively within their resources.
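A safeguard such as limiting personal data in prompts can be partly supported by a simple filter that masks obvious identifiers before text is sent to a public AI tool. The patterns below are deliberately rough and purely illustrative: they catch typical email addresses and UK mobile numbers only, will miss names, addresses, and many other identifiers, and are no substitute for staff training.

```python
import re

# Rough, illustrative patterns; they are not exhaustive.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
UK_MOBILE = re.compile(r"(?:\+44\s?7|07)\d{3}\s?\d{6}")


def redact(prompt: str) -> str:
    """Mask email addresses and UK mobile numbers in a prompt."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return UK_MOBILE.sub("[PHONE]", prompt)


print(redact("Reply to jo@example.com or call 07700 900123 about the order."))
# Reply to [EMAIL] or call [PHONE] about the order.
```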

Finally, integrate AI-specific risks into your existing company risk register instead of creating entirely new processes. This keeps things manageable while ensuring AI governance is part of your broader risk management strategy.

Having solid AI governance and risk management policies is a great start, but they only work when your team understands and actively applies them. People remain the biggest factor in AI-related risks - many incidents stem from employees unintentionally mishandling data or acting too quickly, such as uploading sensitive information into public AI tools. A survey revealed that 70% of employees feel their organisations should offer more training on the ethical use of AI technologies. Building a workplace culture that prioritises ethical AI use requires ongoing effort and open communication.

Here’s how you can focus on training and fostering an ethical mindset across your organisation.

Focus on "implementers" - the employees who interact with AI outputs daily. These individuals need to critically assess AI-generated results instead of blindly following them. Training should address two common pitfalls: decision-automation bias (over-relying on AI decisions) and automation-distrust bias (unfairly doubting AI capabilities).

It’s crucial to explain that machine learning operates on probabilities, not guarantees. Position AI as a tool to assist human judgement, not replace it. Practical training can make a real difference. For example, the Greater Manchester AI Foundry successfully trained 186 SMEs, leading to the creation of 67 new AI products. Set a clear timeline - such as a 60-day deadline - for staff to complete training on approved tools and data handling practices. You can also use free resources like IBM AIF360 or Microsoft Fairlearn to give employees hands-on experience in identifying and addressing algorithmic fairness issues.

This kind of training not only boosts technical skills but also reinforces ethical decision-making as part of everyday work.

Beyond technical training, empowering your team to make ethical choices is essential for reducing AI-related risks. One effective strategy is to involve employees in shaping AI policies. This helps address their practical concerns and increases buy-in. When people feel heard, they’re more likely to adhere to the rules.

Establish clear escalation paths so employees know exactly who to contact if they encounter biased or incorrect AI outputs.

Create an environment where raising ethical concerns is encouraged, not discouraged. Regular team discussions or dedicated forums can make ethical conversations a normal part of your workplace. And remember, ethical AI practices are no longer just about compliance - they’re becoming a commercial necessity. Clients and partners increasingly ask for evidence of AI governance during due diligence. By 2024, 31% of charities had already begun developing AI policies, proving that even organisations with limited resources see the importance of this work. Your employees are your first line of defence against AI missteps, so give them the tools and confidence to make sound ethical decisions every day.

Ethical AI isn't just a buzzword - it's a necessity for the long-term success of businesses, especially SMEs. The seven steps outlined here provide a straightforward plan to strengthen data privacy, reduce bias, ensure transparency, establish accountability, follow recognised standards, implement governance policies, and nurture an ethical workplace culture. These actions go beyond ticking legal boxes; they help build trust with customers, employees, and partners alike.

The stakes are high. UK GDPR breaches can lead to fines of up to 4% of annual turnover. Moreover, clients and partners are paying closer attention to AI governance during due diligence. This makes ethical AI practices not just a protective measure but also a competitive advantage. As aiforsmes.co.uk aptly puts it:

Law sets the floor; ethics raises the bar.

Taking proactive steps today is far more cost-effective than scrambling to fix issues later. Businesses that prioritise ethics now will also be better prepared for regulations that are still being phased in, such as the EU AI Act.

A great example comes from the British Heart Foundation's generative AI pilot. Despite limited resources, the organisation managed AI risks effectively by setting clear use policies, requiring staff approval for external communications, and restricting personal data in AI prompts. This approach not only mitigated risks but also built confidence within the team. For SMEs, even small, well-documented steps can have a meaningful impact.

Ethical AI is about balancing innovation with responsibility. By adopting these seven steps, your business can build the trust, credibility, and resilience needed to navigate the evolving AI landscape. For tailored training and consultancy, visit Wingenious.ai: AI & Automation Agency.

You need to conduct a Data Protection Impact Assessment (DPIA) if your AI tool is likely to create a high risk to individuals' rights and freedoms. This becomes particularly crucial when you're dealing with personal data on a large scale or using new technologies.

DPIAs are designed to help you stay compliant with data protection laws while prioritising the protection of people's privacy.

Bias in AI systems becomes apparent when scrutinising the model's outputs and decision-making processes for indications of unfair treatment or unequal effects on particular groups. Fairness metrics compared across demographic groups help quantify any gap, and mitigation can then be applied at the pre-processing, in-processing, or post-processing stage. Beyond this, examining the model's design assumptions, the choice of features, and how the problem is framed can reveal hidden biases. Including expert analysis and gathering feedback from stakeholders further strengthens this evaluation process.

Under the UK GDPR, a "meaningful" AI explanation means offering clear, straightforward reasons behind a decision, including its logic, in a way that's easy for individuals to grasp. This approach prioritises transparency and accountability, while also taking into account the specific context and how the decision affects individuals, as highlighted in guidance from the ICO. The explanation must provide enough detail to help people understand the process and reasoning behind the decision.
